The New Relic PostgreSQL on-host integration receives and sends inventory metrics from your PostgreSQL instance to the New Relic platform, where you can aggregate and visualize key performance metrics. Data from instances, databases, and clusters helps you find the source of problems.

Read on to install the integration, and to see what data we collect. If you don't have one already, create a New Relic account. It's free, forever.

Compatibility and requirements

Our integration is compatible with PostgreSQL 9.0 or higher.

If PostgreSQL is not running on Kubernetes or Amazon ECS, you can install the infrastructure agent on a Linux or Windows OS host where PostgreSQL is installed or on a host capable of remotely accessing where PostgreSQL is installed. Otherwise:

- If running on Kubernetes, see these requirements.

- If running on ECS, see these requirements.

Quick start

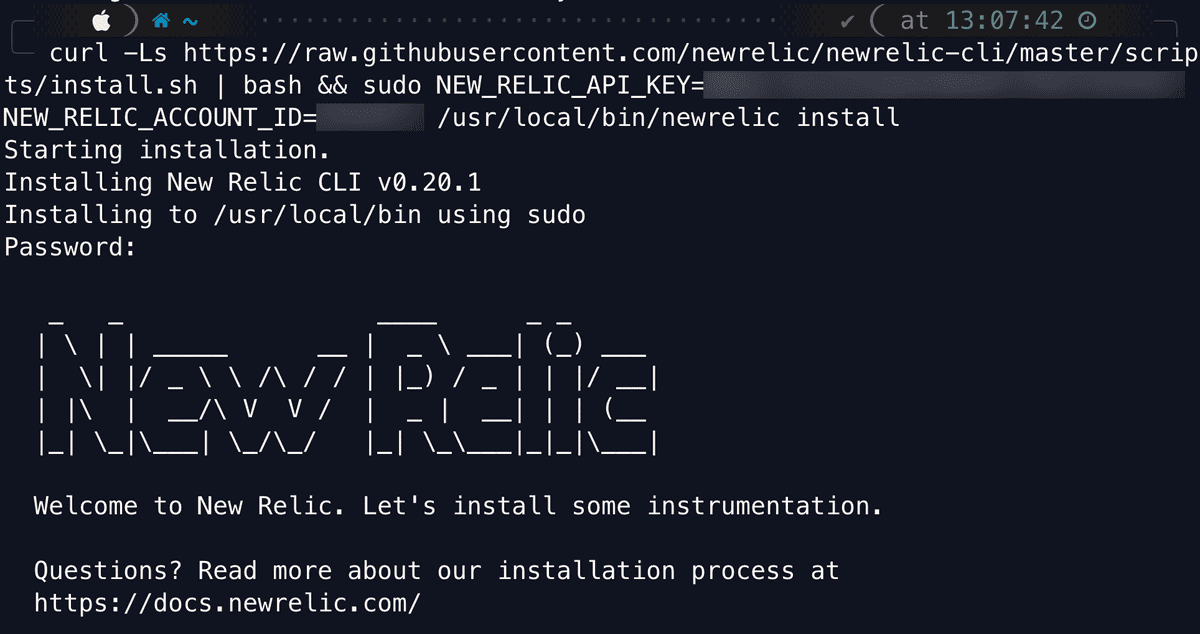

Instrument your PostgreSQL instance quickly and send your telemetry data with guided install. Our guided install creates a customized CLI command for your environment that downloads and installs the New Relic CLI and the infrastructure agent.

Ready to get started? Click one of these button to try it out.

Our guided install uses the infrastructure agent to set up the PostgreSQL integration. Not only that, it discovers other applications and log sources running in your environment and then recommends which ones you should instrument.

The guided install works with most setups. But if it doesn't suit your needs, you can find other methods below to get started monitoring your PostgreSQL instance.

Install and activate

To install the PostgreSQL integration, follow the instructions for your environment:

Additional notes:

- Advanced: Integrations are also available in tarball format to allow for install outside of a package manager.

- On-host integrations do not automatically update. For best results, regularly update the integration package and the infrastructure agent.

PostgreSQL users and permissions

Create a user with SELECT permissions on:

pg_stat_databasepg_stat_database_conflictspg_stat_bgwriter

You can complete this step before or after you configure the postgresql-config.yml file. To create the user for the PostgreSQL integration:

CREATE USER new_relic WITH PASSWORD 'PASSWORD';

GRANT SELECT ON pg_stat_database TO new_relic;

GRANT SELECT ON pg_stat_database_conflicts TO new_relic;

GRANT SELECT ON pg_stat_bgwriter TO new_relic;This will allow the integration to gather global metrics related to the PostgreSQL instance.

If you also want to obtain table and index-related metrics (for example, table size and index size), the PostgreSQL role used by the integration (new_relic) also needs SELECT permissions on the tables from which it will gather metrics from. For example, to allow the integration to collect metrics from all the tables and indexes present in the database (in the public schema), use the following:

GRANT SELECT ON ALL TABLES IN SCHEMA public TO new_relic;Configure the integration

An integration's YAML-format configuration is where you can place required login credentials and configure how data is collected. Which options you change depend on your setup and preference.

There are several ways to configure the integration, depending on how it was installed:

- If enabled via Kubernetes: see Monitor services running on Kubernetes.

- If enabled via Amazon ECS: see Monitor services running on ECS.

- If installed on-host: edit the config in the integration's YAML config file,

postgresql-config.yml.

Config options are below. For an example configuration, see the example config file.

The configuration file has common settings applicable to all integrations like interval, timeout, inventory_source. To read all about these common settings refer to our Configuration Format document.

Important

If you are still using our Legacy configuration/definition files please refer to this document for help.

Specific settings related to PostgreSQL are defined using the env section of the configuration file. These settings control the connection to your PostgreSQL instance as well as other security settings and features. The list of valid settings is described in the next section of this document.

PostgreSQL Instance Settings

The PostgreSQL integration collects both Metrics(M) and Inventory(I) information. Check the Applies To column below to find which settings can be used for each specific collection:

Setting | Description | Default | Applies To |

|---|---|---|---|

HOSTNAME | The hostname for the PostgreSQL connection. | localhost | M/I |

PORT | The port where PostgreSQL is running. | 5432 | M/I |

USERNAME | The user name for the PostgreSQL connection. Required. | N/A | M/I |

PASSWORD | The password for the PostgreSQL connection. Required. | N/A | M/I |

COLLECTION_LIST | JSON array, a JSON object, or the string literal Required except for PgBouncer. | N/A | M |

COLLECTION_IGNORE_DATABASE_LIST | JSON array of database names that will be ignored for metrics collection. Typically useful for cases where | '[]' | M |

PGBOUNCER | Collect | false | M |

ENABLE_SSL | Determines if SSL is enabled. If | false | M/I |

TRUST_SERVER_CERTIFICATE | If | false | M/I |

SSL_ROOT_CERT_LOCATION | Absolute path to PEM-encoded root certificate file. Required if | N/A | M/I |

SSL_CERT_LOCATION | Absolute path to PEM-encoded client certificate file. Required if | N/A | M/I |

SSL_KEY_LOCATION | Absolute path to PEM-encoded client key file. Required if | N/A | M/I |

TIMEOUT | maximum wait for connection, in seconds. Set to | 10 | M/I |

DATABASE | The PostgreSQL database to connect to. | postgres | M/I |

CUSTOM_METRICS_QUERY | A SQL query that required | N/A | M |

CUSTOM_METRICS_CONFIG | A path to a YAML file with a list of custom queries, along with their metric type, database, and sample name overrides. See example for details. | N/A | M |

COLLECT_DB_LOCK_METRICS | Enable collecting database lock metrics, which can be performance intensive. | false | M |

COLLECT_BLOAT_METRICS | Enable tablespace bloat metrics, which can be performance intensive. | true | M |

METRICS | Set to | false | |

INVENTORY | Set to | false |

The values for these settings can be defined in several ways:

- Adding the value directly in the config file. This is the most common way.

- Replacing the values from environment variables using the

{{}}notation. This requires infrastructure agent v1.14.0+. Read more here. - Using Secrets management. Use this to protect sensible information such as passwords to be exposed in plain text on the configuration file. For more information, see Secrets management.

Labels/Custom Attributes

Environment variables can be used to control config settings, such as your license key, and are then passed through to the Infrastructure agent. For instructions on how to use this feature, see Configure the Infrastructure agent.

You can further decorate your metrics using labels. Labels allow you to add key/value pairs attributes to your metrics which you can then use to query, filter or group your metrics on.

Our default sample config file includes examples of labels but, as they are not mandatory, you can remove, modify or add new ones of your choice.

labels: env: production role: postgresqlExample configuration

Example postgresql-config.yml file configuration:

For more about the general structure of on-host integration configuration, see Configuration.

Find and use data

Data from this service is reported to an integration dashboard.

Metrics are attached to these event types:

PostgresqlDatabaseSamplePostgresqlIndexSamplePostgresqlInstanceSamplePostgresqlTableSamplePgBouncerSample

You can query this data for troubleshooting purposes or to create custom charts and dashboards.

For more on how to find and use your data, see Understand integration data.

Metric data

The PostgreSQL integration collects the following database metric attributes. Some attributes apply to all PostgreSQL event types. Some metric names are prefixed with a category indicator and a period, such as db. or index. metric names.

PostgresqlDatabaseSample metrics

These attributes are attached to the PostgresqlDatabaseSample event type:

PostgreSQLDatabaseSample attributes | Description |

|---|---|

| Number of backends currently connected to this database. |

| The maximum number of concurrent connections to the database server. |

| Committed transactions per second. |

| Transactions rolled back per second. |

| Number of disk blocks read in this database per second. |

| Number of times disk blocks were found already in the buffer cache, so that a read was not necessary. This only includes hits in the PostgreSQL buffer cache, not the operating system's file system cache. |

| Rows returned by queries per second. |

| Rows fetched by queries per second. |

| Rows inserted per second. |

| Rows updated per second. |

| Rows deleted per second. |

| Number of queries in this database that have been canceled due to dropped tablespaces. |

| Number of queries in this database that have been canceled due to lock timeouts. |

| Number of queries in this database that have been canceled due to old snapshots. |

| Number of queries in this database that have been canceled due to pinned buffers. |

| Number of queries in this database that have been canceled due to deadlocks. |

| Number of temporary files created by queries in this database. All temporary files are counted, regardless of why the temporary file was created (for example, sorting or hashing), and regardless of the |

| Total amount of data written to temporary files by queries in this database. All temporary files are counted, regardless of why the temporary file was created, and regardless of the |

| Number of deadlocks detected in this database. |

| Time spent reading data file blocks by backends in this database, in milliseconds. |

| Time spent writing data file blocks by backends in this database, in milliseconds. |

PostgresqlIndexSample metrics

These attributes are attached to the PostgresqlIndexSample event type:

PostgreSQLIndexSample attributes | Description |

|---|---|

| The size of an index. |

| The number of index entries returned by scans on this index. |

| The number of index entries fetched by scans on this index. |

PostgresqlInstanceSample metrics

These attributes are attached to the PostgresqlInstanceSample event type:

PostgreSQLInstanceSample attributes | Description |

|---|---|

| Number of scheduled checkpoints that have been performed. |

| Number of requested checkpoints that have been performed. |

| Number of buffers written during checkpoints. |

| Number of buffers written by the background writer. |

| Number of times the background writer stopped a cleaning scan because it had written too many buffers. |

| Number of buffers written directly by a backend. |

| Number of buffers allocated. |

| Number of times a backend had to execute its own |

| Total amount of time that has been spent in the portion of checkpoint processing where files are written to disk, in milliseconds. |

| Total amount of time that has been spent in the portion of checkpoint processing where files are synchronized to disk, in milliseconds. |

PostgresqlTableSample metrics

These attributes are attached to the PostgresqlTableSample event type:

PostgreSQLTableSample attributes | Description |

|---|---|

| The total disk space used by the table, including indexes and TOAST data. |

| The total disk space used by indexes attached to the specified table. |

| Number of live rows. |

| Number of dead rows. |

| The number of disk blocks read from all indexes on this table. |

| The number of buffer hits in all indexes on this table. |

| The number of disk blocks read from this table's TOAST table index. |

| The number of buffer hits in this table's TOAST table index. |

| Time of last vacuum on table. |

| Time of last automatic vacuum on table. |

| Time of last analyze on table. |

| Time of last automatic analyze on table. |

| Number of sequential scans initiated on this table per second. |

| Number of live rows fetched by sequential scans per second. |

| Number of index scans initiated on this table. |

| Number of live rows fetched by index scans. |

| Rows inserted per second. |

| Rows updated per second. |

| Rows deleted per second. |

| Size of bloat in bytes. |

| Size of disk spaced used by the main fork of the table. |

| Fraction of table data size that is bloat. |

PgBouncerSample metrics

These attributes are attached to the PgBouncerSample event type:

PgBouncerSample attributes | Description |

|---|---|

| The transaction rate. |

| The query rate. |

| The total network traffic received. |

| The total network traffic sent. |

| Time spent by |

| Time spent by |

| The average number of transactions per second in last stat period. |

| The average transaction duration. |

| The average number of queries per second in last stat period. |

| The client network traffic received. |

| The client network traffic sent. |

| The average query duration. |

| Client connections linked to server connection and able to process queries. |

| Client connections waiting on a server connection. |

| Server connections linked to a client connection. |

| Server connections idle and ready for a client query. |

| Server connections idle more than |

| Server connections currently running either |

| Server connections currently in the process of logging in. |

| Age of oldest unserved client connection. |

Inventory data

The PostgreSQL integration collects each setting from pg_settings along with its boot_val and reset_val. The inventory data appears on the Inventory page, under the config/postgresql source.

Troubleshooting

Here are some troubleshooting tips for the PostgreSQL integration:

- If you have connection problems, make sure you can connect to the cluster from the same box with

psql. - If you have problems collecting

PgBouncermetrics, make sure you are connected to the instance throughPgBouncer. Default port is6432. - If you get the error message

Error creating list of entities to collect: pq: unsupported startup parameter: extra_float_digits, setignore_startup_parameters = extra_float_digitsin the PgBouncer config file.

Check the source code

This integration is open source software. That means you can browse its source code and send improvements, or create your own fork and build it.

For more help

If you need more help, check out these support and learning resources:

- Browse the Explorers Hub to get help from the community and join in discussions.

- Find answers on our sites and learn how to use our support portal.

- Run New Relic Diagnostics, our troubleshooting tool for Linux, Windows, and macOS.

- Review New Relic's data security and licenses documentation.